My old file server is Full. So it's time to build a new one.

All images are cc-by-sa.

As last time, I used one of the RM424 cases from xcase.co.uk; this time it's an "RM 424 Pro" with a SAS expander built into the backplane. The front holds 24 hot-swap drive bays. It doesn't have a USB socket any more, which is a shame, but given the things one can do with USB these days, I probably shouldn't be plugging foreign USB devices into my machines anyway.

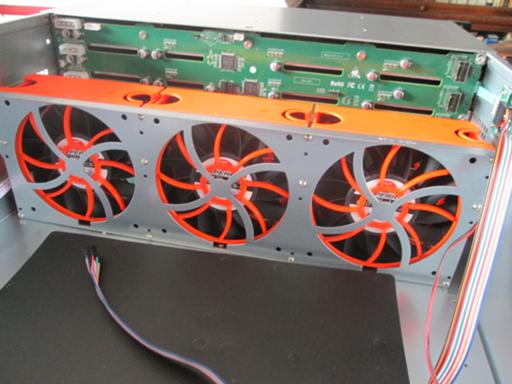

Behind the backplane is the fan wall. (These can now be lifted out, which makes life much easier when running cables down the sides.)

At the back is a fairly conventional mainboard enclosure.

It comes with a variety of power-supply blanking plates, two 2.5" drive mounting plates (see later) and assorted screws; XCase also supplied a Seasonic 750W power supply with plenty of connectors.

I also used (from top to bottom):

- a HighPoint Rocket 2720SGL hard drive controller

- eight 4T Toshiba hard drives (to start with)

- a Xeon E3-1231 (Haswell, quad core)

- 32G of DDR3 ECC memory

- two 8087-8087 cables

- two Sandisk 64GB SSDs

- a Supermicro X10SL7-F mainboard

That black rectangle is actually an insulating layer between mainboard and case, presumably to stop any solder dags on the underside from becoming earthed. Can't say I've ever had a problem, but it shouldn't do any harm.

The full setup. (Before cable tying, because it's clearer what's going on this way.) Power from the PSU runs forward to the backplane and feeds the drive cage; fan headers from the cage feed the three fans and put their speed under software control, which is good, because at full speed they're louder than the old ones. The two SFF8087 cables come off the HighPoint controller and run forward to supply data to the backplane. The SSDs, which serve as the root filesystem (RAID-1), are mounted to the side of the case, so that I can fill the drive cage with drives to feed the storage array.

The only problem was that the power button didn't work. But I wired the reset button (which I'll basically never want to use) onto the power pins, and that's fine.

So it's all fired up, and reporting 29T free (8 x 4T in raidz2 should be 24T, which would seem more plausible, but zfs does this). It'll take more than a week to copy everything over: yes, I could take down the old fileserver and move the drives into the new machine, but the old fileserver isn't dying, just full, and I'd rather maintain continuity of service during a longer copying procedure than have a few hours of outage.
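The 24T expectation is straightforward arithmetic: raidz2 gives two drives' worth of space to parity, so eight drives leave six for data. A minimal Python sketch of that sum, assuming equal-sized drives and ignoring ZFS metadata and reserved space (the function names are illustrative only, not part of any ZFS tool):

```python
# Nominal capacity of a single raidz vdev, in decimal TB as printed on the drives.
# Assumes all drives are the same size; ignores ZFS metadata, padding and reserved space.


def raidz_data_tb(n_drives: int, drive_tb: float, parity: int) -> float:
    """Space left for data after `parity` drives' worth goes to parity."""
    if n_drives <= parity:
        raise ValueError("a raidz vdev needs more drives than parity devices")
    return (n_drives - parity) * drive_tb


def raw_tb(n_drives: int, drive_tb: float) -> float:
    """Total raw space across all drives, parity included."""
    return n_drives * drive_tb


n, size = 8, 4.0  # eight 4T drives, as in this build
print(f"raw:         {raw_tb(n, size):.0f} TB")
print(f"raidz2 data: {raidz_data_tb(n, size, parity=2):.0f} TB")
```

Run as-is it prints 32 TB raw and 24 TB for data. The 32 TB raw figure, once converted to binary units, is close to the 29T being reported, which suggests the tool is showing raw space rather than space available for data.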

Ultimate target capacity is around 72T. I could use bigger drives, but I couldn't find anything larger than 4T that wasn't SMR (shingled magnetic recording), and while ZFS is pretty good at recovering from errors, I'd rather not put it to that test.
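The 72T target works out if the 24 bays are eventually filled as three tranches of eight 4T drives, each its own raidz2 vdev. That layout is an assumption here (the post only gives the total), and the sketch below just sums nominal data capacity across vdevs, since a pool spreads data over all of its top-level vdevs:

```python
# Hypothetical final layout: three tranches of eight 4T drives, each a raidz2 vdev.
# A pool stripes data across its top-level vdevs, so nominal data capacity is their sum.

BAYS = 24       # hot-swap bays in the RM 424 Pro
TRANCHE = 8     # drives per raidz2 vdev (assumed)
PARITY = 2      # raidz2
DRIVE_TB = 4.0  # decimal TB per drive

vdevs = BAYS // TRANCHE
data_tb = vdevs * (TRANCHE - PARITY) * DRIVE_TB
print(f"{vdevs} vdevs x {TRANCHE - PARITY} data drives x {DRIVE_TB:.0f}T = {data_tb:.0f}T")
```

This prints `3 vdevs x 6 data drives x 4T = 72T`; a different tranche size or parity level would change the total.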


- Posted by Owen Smith at 01:48pm on 16 September 2015

Not many expansion slots on the motherboard, only two. I assume you're not planning to put expansion cards in this server?

My other thought was that 64GB for the root filing system is rather small, then I remembered it's unix not Windows.

- Posted by RogerBW at 01:59pm on 16 September 2015

The plan is that the only card ever to go into that mainboard is the hard drive controller. There's no need for expansion cards; the blessed thing has three network ports, VGA and serial already! (And a bunch of USB sockets.)

As for software, I have a post in progress talking about building a fileserver in more detail, but basically all it needs to run apart from the kernel is:

- sshd

- zfs

- file servers (http, smb, nfs servers)

- mpd for audio streaming

The old file server has reached 2.8 gig with all the various stuff I've put on there. I only got 64 gig SSDs because I couldn't find anything smaller.

- Posted by PeterC at 09:04am on 17 September 2015

You've gone to the expense of a separate SAS HBA instead of using the onboard controller. Is there any reason for this? I believe that it'd work fine with the application of a reverse-breakout cable such as http://www.xcase.co.uk/cables/x-case-reverse-breakout-cable-mini-sas-backplane-to-motherboard-10-00-x-case.html, however, when I asked X-Case their opinion, I was told it wouldn't work because the motherboard had the wrong connector, and was then recommended a Xeon E5 system instead! I can't help thinking that I got through to a commission-driven salescritter rather than somebody who can spell SAS.

29T is "correct" for "zpool list" and similar. raidzN vdevs report the raw capacity, as opposed to mirrored vdevs which report the size of the largest disk, and it uses those silly power-of-1024 units. 8x4TB is 29.10TiB. (Your disks will actually be slightly larger than 4TB due to IDEMA standardisation of disk capacities for RAID arrays, but not enough to knock these numbers out.)
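The 29.10TiB figure is a unit conversion: drive capacities are quoted in decimal terabytes (10^12 bytes), while the tools count in tebibytes (2^40 bytes). A minimal Python sketch, using the nominal 4TB per drive and so ignoring the small extra capacity mentioned above:

```python
# Drive makers quote decimal terabytes (10**12 bytes); zpool list uses
# binary units, so its "T" is really a tebibyte (2**40 bytes).
TB = 10**12
TIB = 2**40


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes to binary tebibytes."""
    return tb * TB / TIB


raw_tib = tb_to_tib(8 * 4)  # eight 4TB drives, parity included
print(f"{raw_tib:.2f} TiB")
```

It prints `29.10 TiB`: the same 32 trillion bytes, just counted in larger units.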

The amount of usable space is more Interesting. Upstream Sun/Oracle ZFS will only let you use 63/64 of the disk, hence why your zpools are only ~98% full when you get ENOSPC. 6x4TBx63/64 is 21.49TiB which is what "zfs list" should report. However, the latest OpenZFS seems to have changed this to 62/64 as suggested by it saying "Default reserved space was increased from 1.6% to 3.3% of total pool capacity" in https://github.com/zfsonlinux/zfs/releases/tag/zfs-0.6.5. Given that performance does drop like a stone at about 97% full, this is perhaps a good design decision, but it did give me a nasty surprise when I imported a 98% full Linux-created zpool into FreeBSD 10.2. It probably shouldn't have been the ZoL docs that tipped me off as to what happened.

So in proper units, you should get exactly 23.63TB out of your 8x4TB array, or 23.25TB if you're using a version with increased reserved space. That should keep you going for a while :)
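The usable-space figures are also easy to check. This sketch takes the numbers in the comment at face value (six data drives' worth of space, with 1/64 held back by older ZFS and 2/64 by ZoL 0.6.5) rather than modelling everything ZFS actually reserves; `usable_bytes` is a hypothetical helper:

```python
TB = 10**12   # decimal terabyte
TIB = 2**40   # binary tebibyte


def usable_bytes(data_drives: int, drive_tb: float, reserve_64ths: int) -> float:
    """Data space left after ZFS holds back reserve_64ths/64 of it (simplified model)."""
    return data_drives * drive_tb * TB * (64 - reserve_64ths) / 64


# 8-drive raidz2: six drives' worth of data space.
for label, reserve in (("1/64 reserved", 1), ("2/64 reserved", 2)):
    b = usable_bytes(6, 4.0, reserve)
    print(f"{label}: {b / TB:.3f} TB = {b / TIB:.2f} TiB")
```

It prints 23.625 TB (21.49 TiB) with 1/64 reserved and 23.250 TB (21.15 TiB) with 2/64, in line with the figures above once rounded.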

One reason to upgrade to ZoL 0.6.5 — I had to build from source as they've forgotten Debian exists — is that you get feature@large_blocks so you can immediately have recordsize=1M. Increasing the block size like this gives compression something to get its teeth into, and also provides a commensurate reduction in the number of blocks, so dedup becomes practical as the DDT is so much smaller. The trade-off is that small updates within files are much slower due to larger read-modify-write operations. This might not seem worth it when you're staring at acres of empty terabytes, but a bit of testing may reveal that it wins you another terabyte which will be nice when the array is getting full. For space maximalists, there are a couple of kernel module options to give recordsize=16M and reduce the reserved space, but the performance trade off may be too much.
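To put a number on the block-count reduction: the dedup table keeps an entry per unique block, so the number of records is an upper bound on its size, and going from the default 128K recordsize to 1M cuts that eightfold for the same data. A rough Python sketch, assuming large files that fill whole records (small files and partial records behave differently in practice):

```python
# Number of records needed to hold a given amount of file data at two
# recordsize settings. Assumes files are large enough that nearly every
# record is full-sized; small files use smaller blocks in practice.
KIB = 2**10
MIB = 2**20
TIB = 2**40


def records_needed(data_bytes: int, recordsize: int) -> int:
    # Ceiling division: a partial final record still counts as one block.
    return -(-data_bytes // recordsize)


data = 1 * TIB
default = records_needed(data, 128 * KIB)  # ZFS default recordsize
large = records_needed(data, 1 * MIB)      # recordsize=1M with large_blocks
print(f"128K records: {default:,}")
print(f"1M records:   {large:,}")
print(f"reduction:    {default // large}x")
```

For 1 TiB of data that is 8,388,608 records at 128K against 1,048,576 at 1M.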

I've tried recordsize=1M and dedup on FreeBSD with ~3TB of test data and it worked fine, albeit a bit sluggish as I was doing it in a VM with slow disk access. I've yet to give ZoL 0.6.5 a good hammering on these settings.

- Posted by RogerBW at 09:25am on 17 September 2015

I already had the HBA (because the previous fileserver doesn't have the SAS expander, needs 6 8087 sockets for full capacity, and three two-socket cards was the cheapest way to do it - but I didn't end up fully populating that machine). Yes, I think a reverse breakout cable ought to work on this mainboard.

I've no objection to using TiB as long as they're labelled TiB. Which Linux software is increasingly managing to do, though it's not all there yet.

This array is only an upgrade from 3TB to 4TB discs, so in nominal TB from disc that's only 6TB more.

Only 6TB. Truly we are living in the end times.

The next stage is to add another 8×4TB as tranche 2, but that's another large chunk of money. And what I'd really like to do is add them to the old server, thereby have full backups, and then add another set to the new server. Then I can maintain a complete duplicate set of files on another machine, and feel confident doing upgrades and so on.

I'll probably wait for the 0.6.5 Debian package before I upgrade. What can I say, I'm lazy.

- Posted by Owen Smith at 01:19pm on 17 September 2015

I had to look up possible meanings of 8087, it still means floating point to me but that didn't make sense having so many of them. I had no idea it's a high density SAS backplane connector.

Only two USB3 ports on the new motherboard, one of which you don't have because it's for the front panel? Seems a very low number to me. My new work PC has 10 USBs (4 front 6 rear) of which only 4 are USB3 (2 front 2 rear) which again seems low. Why are motherboards being so stingy on the USB3? I've nearly run out at work already.

- Posted by RogerBW at 01:29pm on 17 September 2015

Yeah, I know. Technically it's SFF8087, for small form factor, but that rarely gets used.

I don't expect ever to use the USB ports for anything except hooking up a keyboard for emergency fixes (which is also how I did the initial installation, with keyboard and USB mass storage device). This board is not intended for desktop use.

- Posted by RogerBW at 05:01pm on 18 September 2015

A good time to be lazy.
