In part 2 of this series on building a file server, I'll talk about
hardware selection.

I tend to make the big storage array completely separate from the
main operating system drive; when I started doing this there was
little ZFS support in GRUB, so it made booting much easier. I run the
operating system off SSD (well, actually a pair of SSDs in RAID-1);
160GB is plenty, and those (from SanDisk) were the smallest I could
buy at the time, so that's what I've used.

You know how many discs you want. What are you going to put them into?
Even eight-bay chassis aren't all that easy to come by, and you may
find yourself using bay adaptors (e.g. squeezing three 3½" drives into
two adjacent 5¼" bays) and other such fixes. I've been very happy with
my cases from X-Case: 4U rackmounts with 24 hot-swap bays against a
backplane, which simplifies power and data connections. (Some of these
come with port expanders so that you only need eight controller
channels to run 24 discs; that's cheaper but slower than getting a
full 24-port controller.) As always, consider power and cooling,
bearing in mind that discs packed closely together can get quite hot.
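
To put a rough number on "cheaper but slower", here is a minimal sketch of the bandwidth each disc gets when 24 of them share eight channels through an expander. It assumes 6Gb/s channels carrying about 600 MB/s of payload each, and every disc busy at the same time; `per_disc_share` is an illustrative helper, not part of any real tool.

```python
# Rough per-disc bandwidth behind a port expander.
# Assumption: each 6Gb/s channel carries about 600 MB/s of payload
# (6 Gb/s line rate with 8b/10b encoding).
CHANNEL_MB_S = 600


def per_disc_share(channels: int, discs: int,
                   channel_mb_s: float = CHANNEL_MB_S) -> float:
    """Bandwidth (MB/s) each disc gets if all discs are busy at once."""
    if channels <= 0 or discs <= 0:
        raise ValueError("channels and discs must be positive")
    # A single disc can never use more than one channel's worth.
    return min(channel_mb_s, channels * channel_mb_s / discs)


expander = per_disc_share(channels=8, discs=24)   # eight channels, 24 bays
direct = per_disc_share(channels=24, discs=24)    # full 24-port controller
print(f"expander: {expander:.0f} MB/s per disc, "
      f"direct: {direct:.0f} MB/s per disc")
```

On those assumptions each disc behind the expander gets about 200 MB/s, against 600 MB/s with a dedicated port, so the squeeze mostly shows up when the whole array is busy at once (during a scrub or a rebuild, for example).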

Some of the X-Case boxes have brackets to hold the SSDs; or you can
bodge your own.

You'll need controllers for these discs. For a small system, you can
use the controllers supplied on the mainboard; remember to allow for
the operating system drives. Mainboards with ten SATA ports tend to be
expensive and specialist items, and you already want a mainboard and
CPU that support ECC RAM - really, you do, you're doing checksums on
your data with this stuff - and lots of it, as much as you can cram
on. I've usually ended up with Xeon CPUs; I have 32GB RAM on the
current hardware iteration, and 64GB would be better.

For a bigger array than the mainboard can support directly, two of the
mainboard channels go to the mirrored SSDs, while the big array is
driven off an interface card. (This helps keep things separate at a
bus level, leading to less confusion if you're trying to repair the
array in a hurry.)

6Gb/s SATA discs really want one PCI Express lane per drive to run at
full speed, so a controller that's running eight drives should be in a
×8 slot; it'll probably work in a narrower slot, but you'll be giving
up performance. (A 24-port controller in a ×16 slot seems to be fine.)
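
The lane arithmetic behind that rule of thumb can be sketched as follows. The PCIe generation is an assumption (the post doesn't say which one the slots are), so the sketch defaults to PCIe 2.0 and lists PCIe 3.0 for comparison; `slot_share` is an illustrative name, and the figures are payload rates after encoding overhead.

```python
# Payload bandwidth figures, in MB/s.
SATA3_MB_S = 600  # 6 Gb/s SATA link with 8b/10b encoding
PCIE_LANE_MB_S = {
    2: 500,  # PCIe 2.0: 5 GT/s with 8b/10b encoding
    3: 985,  # PCIe 3.0: 8 GT/s with 128b/130b encoding (approximate)
}


def slot_share(drives: int, lanes: int, pcie_gen: int = 2) -> float:
    """MB/s available to each drive when every drive is active at once."""
    return lanes * PCIE_LANE_MB_S[pcie_gen] / drives


# Eight drives in a x8 slot, the same card in a x4 slot,
# and a 24-port card in a x16 slot.
for drives, lanes in [(8, 8), (8, 4), (24, 16)]:
    share = slot_share(drives, lanes)
    print(f"{drives} drives in a x{lanes} slot: {share:.0f} MB/s each "
          f"({share / SATA3_MB_S:.0%} of the SATA link rate)")
```

Under the PCIe 2.0 assumption, 24 drives in a ×16 slot get roughly 333 MB/s each: less than the SATA link rate, but more than spinning discs usually sustain, which would be consistent with that combination seeming fine in practice.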

The first controller I used was an AOC 8-port SATA card using standard
sockets. (Looking at the design, it had clearly been upgraded from an
earlier 6-port version.) That worked very well, but even with eight
discs the wiring had a tendency to get out of control.

More professional controllers have "8087" connectors (SFF-8087,
internal Mini-SAS), each of which carries four SATA data channels;
this will be connected either to another 8087 on the backplane, or to
a "breakout" cable that feeds individual discs. (Note that a "reverse
breakout" cable, to combine four individual SATA controller ports into
one 8087 connector going to a backplane, is wired differently.) I've
had reasonable success with the HighPoint Rocket 2720SGL (now EOL, but
you can still get them off Amazon), with two 8087 ports to control
eight drives, though there was no support for it in the FreeBSD 9
kernel. Linux had absolutely no problem with it.

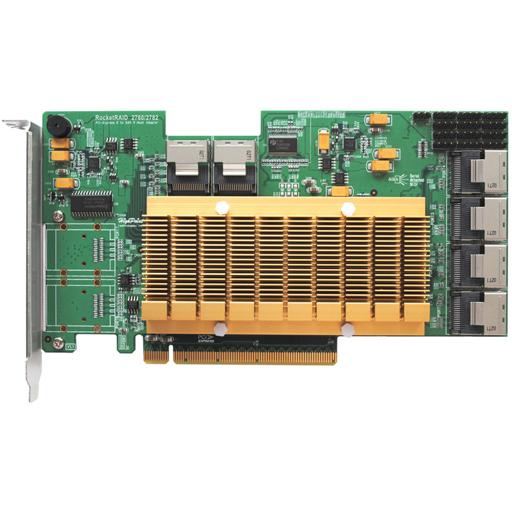

Or if you're getting really serious and want 24 discs… you can in
theory gang together three 8-port controllers, but in practice getting
a mainboard with three ×8 slots tends to be difficult, so bite the
bullet and get a 24-port controller. I'm now using a HighPoint 2760A
in each of my active servers.

Absolutely do not buy a hardware RAID controller. This is going to be
done with software, so you need a controller that lets you address the
discs individually (often known as JBOD mode, Just a Bunch Of Discs);
hardware RAID adds hugely to the cost, and causes trouble down the
line when the controller goes bad and you can't find a replacement. If
a plain JBOD controller goes bad, you can plug in any other cheap JBOD
controller and the array will still work.

It would be nice to have battery backup, to complete disc writes in
case of power failure, but it tends to be stupidly expensive.

I've had enough bad experiences with Adaptec controllers that I now
won't buy them even if they offer a JBOD one. (Even their JBOD modes
have a habit of putting proprietary gubbins on the disc so that
nothing else can read it.)

Don't forget that each disc needs a power connection too (again,
backplane configuration may make this easier). Get a grunty PSU, but
more importantly one where you can get enough power feeds out without
having to use lots of splitters.
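
As a rough guide to how grunty, here is a back-of-envelope budget for the discs alone. The per-disc figures are placeholders rather than measurements (take the real ones from your drives' datasheet), `disc_power_budget` is a made-up helper, and the mainboard, CPU and fans all need adding on top.

```python
# Hypothetical per-disc figures; replace with values from the datasheet.
SPINUP_AMPS_12V = 2.0   # assumed peak 12V current while a disc spins up
RUNNING_WATTS = 8.0     # assumed draw once the disc is spinning


def disc_power_budget(discs: int, headroom: float = 1.25) -> dict:
    """Worst case if all discs spin up together, plus steady state."""
    spinup_amps = discs * SPINUP_AMPS_12V
    return {
        "spinup_amps_12v": spinup_amps,
        "spinup_watts_12v": spinup_amps * 12,
        "running_watts": discs * RUNNING_WATTS,
        # 12V capacity to look for, with a safety margin on top
        "suggested_12v_amps": spinup_amps * headroom,
    }


for name, value in disc_power_budget(24).items():
    print(f"{name}: {value:.0f}")
```

The point of the sketch is that the peak at power-on, when every disc spins up at once, is what sizes the 12V side of the PSU, not the steady-state draw.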
